I don’t think it can really be made with new chips. There are several issues.

Even for 70ns FPM DRAM, the fast-page /CAS access time is quite short, 20ns or so. All you have to do to accomplish a fast-page access is set up the column address on the DRAM address bus, assert /CAS, then wait 20ns for the read data to come back. There isn’t even any address-to-/CAS setup time, so the whole thing occurs inside of 20ns.

Compare this to SDRAM, the go-to cheap RAM if you want tens of megabytes. Typically SDRAM systems run with a fixed-frequency clock. The lowest-latency way to use SDRAM is with CAS latency 2 at 133 MHz, making for a 7.5ns cycle time. So to read from an SDRAM given that the right row is already open (analogous to an FPM cycle), you have to issue a read command, wait 1.5 ns of setup time, bring clock high, wait 3.75 ns, bring clock low and issue a NOP command, wait 3.75 ns, bring clock high again, wait 5.4 ns, and then the data comes out.

So just in the SDRAM, 14.4ns of your 20 nanosecond budget is used up. In/out level shift delay can be minimized to a total of 0.5 ns using voltage-limiting FET switches, so then you have about 5 nanoseconds to synchronize the /CAS signal. With a 7.5ns clock period, this is impossible! The 133 MHz clock would be running independently of the clock on the main board, so it would be possible to just miss /CAS on a clock edge. Then you have to wait up to 7.5ns more for the next edge, and that blows the remaining 5ns budget. And realistically you need some additional fraction of a clock cycle budgeted for synchronization.
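To put numbers on it, here is a rough Python tally of that budget. The figures are the ones quoted in this post; the constant and function names are made up for illustration, and real parts will vary by speed grade.

```python
# Timing figures quoted in the post, all in nanoseconds.
FPM_CAS_ACCESS_NS = 20.0   # fast-page /CAS access budget for 70ns FPM
CLOCK_PERIOD_NS = 7.5      # 133 MHz SDRAM clock
SETUP_NS = 1.5             # command setup before the first rising edge
CLOCK_TO_DATA_NS = 5.4     # delay from the second rising edge to data out
LEVEL_SHIFT_NS = 0.5       # total in/out delay through the FET switches


def sdram_read_ns():
    """Open-row read at CAS latency 2: setup, one full clock, clock-to-data."""
    return SETUP_NS + CLOCK_PERIOD_NS + CLOCK_TO_DATA_NS


def sync_slack_ns():
    """What is left of the FPM budget for synchronizing /CAS."""
    return FPM_CAS_ACCESS_NS - sdram_read_ns() - LEVEL_SHIFT_NS


def worst_case_sync_wait_ns():
    """A free-running clock can just miss /CAS and cost a whole period."""
    return CLOCK_PERIOD_NS


if __name__ == "__main__":
    print(f"SDRAM read:      {sdram_read_ns():.1f} ns")
    print(f"Slack for sync:  {sync_slack_ns():.1f} ns")
    print(f"Worst-case wait: {worst_case_sync_wait_ns():.1f} ns")
    print("Fits" if worst_case_sync_wait_ns() <= sync_slack_ns() else "Does not fit")
```

The worst-case wait is bigger than the slack, which is the whole problem with a free-running clock.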

So the lesson here is, it’s impossible to emulate 70ns FPM with SDRAM running from a repetitive clock. There is another solution though.

Instead of having the clock always running, in which case you can sort of get the “just missed it” slow alignment that can take up to one whole clock cycle, what you can do is generate clock pulses to the SDRAM just in response to /RAS and /CAS events from the host system. With this approach, you get the entire 5ns (not much though) to pay for your combinational delays generating the clock. The issue here is that to read (as well as to finish a write), you have to pulse the clock twice. Without a crystal controlled oscillator, it’s hard to get the exact 7.5ns delay in between clock pulses, and any variance there subtracts from the 5ns. Crystals can’t be run for just one clock cycle here and there; they take time to start oscillating. So you have to implement the delays with delay line traces on the PCB. A 7.5ns delay is about 50 inches of 50 ohm trace... but you can do it.
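For a feel of the delay-line numbers, here is a small Python sketch. The 150 ps/inch figure is an assumption, just what "7.5ns is about 50 inches" works out to; the real value depends on the board material and stackup. The 5% tolerance in the example is also an assumed number, only there to show how delay variance eats the slack.

```python
def trace_length_inches(delay_ns, ps_per_inch=150.0):
    """Trace length needed for a given delay (ps_per_inch is an assumed figure)."""
    return delay_ns * 1000.0 / ps_per_inch


def slack_after_tolerance_ns(slack_ns, delay_ns, tolerance):
    """Slack remaining if the delay line can run long by the given fraction."""
    return slack_ns - delay_ns * tolerance


if __name__ == "__main__":
    length = trace_length_inches(7.5)
    left = slack_after_tolerance_ns(5.0, 7.5, 0.05)  # 5% is an assumed tolerance
    print(f"7.5 ns delay needs about {length:.0f} inches of trace")
    print(f"Slack left with a 5% delay error: {left:.3f} ns")
```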

Alright, so you have your almost-no-delay FET switches doing the level shifting, you’ve built an ASIC that can put out the SDRAM command/address multiple times using only the five spare nanoseconds, and your board has a hundred inches of delay lines. Now what else has to be done to hook up SDRAM to an FPM/EDO bus?

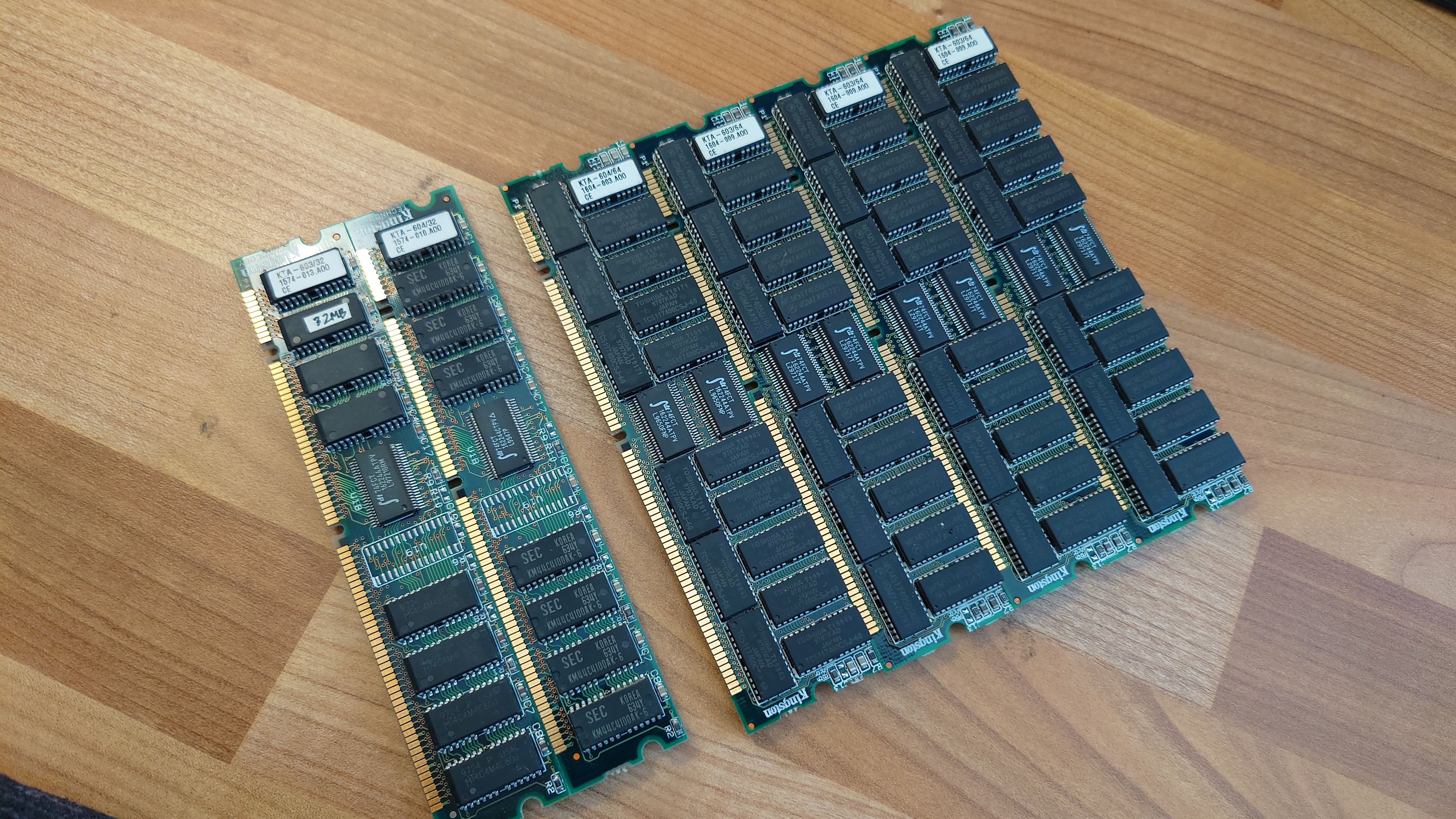

Well, I don’t know how DRAM DIMMs are addressed, but with the kinds of DRAM chips used on 30-pin SIMMs, there is an array size mismatch between DRAM and SDRAM. What I mean is, a 16 MB 30-pin DRAM SIMM has 4096 rows and 4096 columns of 8 bits. That means when you have opened a given row, any of the 4096 bytes therein must be able to be accessed in 20ns. The largest SDRAM chips still made have only 1024 byte rows so you need four of them, totaling 64 or 128 megabytes just to make the 16 MB 30-pin SIMM. The old 512Mb SDRAMs had 2048 byte rows so you only need two but it’s still 128 MB required just to implement 16 MB. For the 64-bit DIMM you may need 8 or 16 SDRAM chips. (I dunno the typical row/column size for a 64 MB DIMM.)
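The chip-count arithmetic for the 30-pin SIMM case can be sketched like this in Python. The 128 Mb and 256 Mb densities are my inference from the "64 or 128 megabytes" totals, and the function names are hypothetical.

```python
def chips_needed(fpm_row_bytes, sdram_row_bytes):
    """SDRAM chips needed so one open row covers a whole FPM row (rounded up)."""
    return -(-fpm_row_bytes // sdram_row_bytes)


def total_sdram_mb(fpm_row_bytes, sdram_row_bytes, chip_megabits):
    """Total SDRAM capacity in megabytes that you end up buying."""
    return chips_needed(fpm_row_bytes, sdram_row_bytes) * chip_megabits // 8


if __name__ == "__main__":
    FPM_ROW_BYTES = 4096  # 16 MB 30-pin SIMM: 4096 rows x 4096 columns x 8 bits
    # (row bytes, chip density in megabits); densities are assumed, see above
    for row_bytes, megabits in [(1024, 128), (1024, 256), (2048, 512)]:
        n = chips_needed(FPM_ROW_BYTES, row_bytes)
        mb = total_sdram_mb(FPM_ROW_BYTES, row_bytes, megabits)
        print(f"{row_bytes}-byte rows, {megabits} Mb chips: {n} chips, {mb} MB total")
```

Either way you end up with 4x to 8x the capacity of the SIMM you are emulating, and most of it goes unused.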

So... FET switches, special ASIC that has pin-to-pin delays much much lower than 5ns (gotta incur it multiple times), big long delay lines, a bunch more SDRAM chips than you’d think are required... I’ll start tomorrow! Hahaha nooo

You could use SRAM but it’ll cost several hundred bucks for 64 MB. DDRx has worse latency than SDRAM. I think they did double the number of columns per row in DDR2, so you’d only need half the chips if you used DDR2, but the extra latency would certainly make it impossible to meet the 20ns /CAS access time of 70ns FPM. And going from 70ns to 60ns means you’ve gotta do a /CAS access in 16-18ns, so all but 1 to 3ns of the 5ns is gone.

Tough problem! I don’t think it will be solved until SRAM is cheap enough to build a tens-of-MB SIMM/DIMM/kit for tens of bucks.