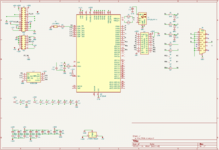

Sounds like it's time to do a board! I can modify my SE ATX+VGA adapter for the purpose.

As for feeding the constants, let's try to have a single loop on the second core that services all of the real-time work: feeding the constants, retrieving the video data, and constructing the output framebuffer. We can of course use DMA as well, but some CPU work will still be needed, at least to kick off the transfers and to run the frame-switch logic. So the second core will basically run a loop that repeatedly services each of the buffers in turn.
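A minimal sketch of that single service loop follows. It assumes each real-time job can be split into short, non-blocking steps that are polled in turn. The task names (`feed_constants`, `retrieve_video`, `build_output`) and `service_loop` are hypothetical placeholders with stub bodies, not existing code. In firmware the loop would run forever on the second core rather than for a fixed count.

```c
#include <stddef.h>

/* Hypothetical stubs: each task does a small, bounded amount of work per
   call and returns, so no single job can starve the others. The counters
   only stand in for real work. */
static unsigned long constants_fed, lines_retrieved, lines_built;

static void feed_constants(void) { constants_fed++; }   /* e.g. top up a FIFO/DMA queue */
static void retrieve_video(void) { lines_retrieved++; } /* e.g. drain captured Mac video */
static void build_output(void)   { lines_built++; }     /* e.g. expand a line into the framebuffer */

typedef void (*task_fn)(void);

static const task_fn tasks[] = { feed_constants, retrieve_video, build_output };

/* Round-robin poll of every task. Real firmware would use for (;;)
   instead of a fixed iteration count. */
static void service_loop(unsigned long iterations)
{
    for (unsigned long i = 0; i < iterations; i++) {
        for (size_t t = 0; t < sizeof tasks / sizeof tasks[0]; t++) {
            tasks[t]();
        }
    }
}
```

Cooperative polling keeps all the timing-critical work on one core with no locking. The catch is that every task has to return quickly, so DMA does the bulk copying and the CPU only restarts transfers and makes the frame-switch decisions.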

And on the subject of the frame-switch logic... We must keep two separate framebuffers in order to avoid VSYNC tearing. The algorithm for choosing which framebuffer to write into and which to read out of is as follows:

When storing a new frame, check which framebuffer is currently being output and which line the output is on. If the output is near the beginning of its frame, say at line 62 (of 768) or earlier, store the new frame in the other framebuffer. Otherwise, if the output is further into the frame, store it in the framebuffer currently being used for output.
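In code, the storing rule is a single comparison made whenever a new Mac frame begins. This is a minimal sketch that assumes the two framebuffers are indexed 0 and 1 and that the VGA line counter runs from 0 to 767. `pick_store_buffer` and `STORE_SWITCH_LINE` are hypothetical names.

```c
#define STORE_SWITCH_LINE 62  /* tunable: larger = more margin, more latency */

/* Called when a new Mac frame begins. out_buf is the buffer (0 or 1) the
   VGA side is currently scanning out; out_line is its current line
   (0..767). Returns the buffer the incoming frame should be written into. */
static int pick_store_buffer(int out_buf, int out_line)
{
    if (out_line <= STORE_SWITCH_LINE)
        return out_buf ^ 1;   /* output only just started: use the other buffer */
    return out_buf;           /* output is far enough ahead: share its buffer */
}
```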

Picking which framebuffer to output from works much the same way as picking which one to store to. Check which framebuffer is currently being written and which line the input is on. If the input is near the beginning of its frame, at line 10 (of 342) or earlier, start this output frame from the other framebuffer. Otherwise, if the input is further into the frame, start output from the framebuffer currently being written to.
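The output rule mirrors the storing rule and is evaluated once at the start of every VGA frame. As before, this is a sketch that assumes buffer indices 0 and 1 and a Mac line counter running from 0 to 341. `pick_output_buffer` and `OUTPUT_SWITCH_LINE` are hypothetical names.

```c
#define OUTPUT_SWITCH_LINE 10  /* tunable: larger = more margin, more latency */

/* Called at the start of each VGA frame. in_buf is the buffer (0 or 1) the
   Mac frame is currently being written into; in_line is the input line
   (0..341). Returns the buffer to scan out for this VGA frame. */
static int pick_output_buffer(int in_buf, int in_line)
{
    if (in_line <= OUTPUT_SWITCH_LINE)
        return in_buf ^ 1;   /* input barely started: show the previous complete frame */
    return in_buf;           /* input is ahead: follow it through the same buffer */
}
```

Following the input into the same buffer works because the scan-out starts at line 0, behind the write pointer. It only ever reads lines that have already been rewritten, as long as it does not catch up within the frame. The 10-line margin is there to prevent that.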

This minimizes input-to-output latency while repeating frames as needed, so that there is never a VSYNC tearing effect where the current input and output positions cross over. Tweaking the line constants (62 and 10) adjusts the latency and the allowable frame-rate drift between the Mac and the 1024x768 VGA output. Lower line constants mean lower average latency, but set them too low (or too high) and there will be VSYNC tearing.
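One way to check a pair of constants before trying them on hardware is to simulate both rules against two slightly different frame periods. The simulation counts output frames that contain lines from more than one input frame. This is a rough sketch with several assumptions: lines are spread evenly over each frame period (blanking is ignored), time advances in 1 µs ticks, and periods of 16626 µs and 16666 µs stand in for a Mac running slightly faster than the VGA side. `count_tears` and its parameters are hypothetical names.

```c
#include <limits.h>

#define IN_LINES  342   /* Mac lines per frame */
#define OUT_LINES 768   /* 1024x768 VGA lines per frame */

/* Runs both buffer-selection rules against input/output frame periods
   t_in and t_out (in 1 us ticks). Returns how many VGA frames, after a
   10-frame warm-up, showed lines from more than one Mac frame. */
static long count_tears(long t_in, long t_out, int store_switch,
                        int output_switch, long out_frames)
{
    static long tag[2][IN_LINES];       /* input frame that last wrote each line */
    for (int b = 0; b < 2; b++)
        for (int i = 0; i < IN_LINES; i++)
            tag[b][i] = -1;             /* -1: never written */

    int wb = 0, rb = 0;                 /* buffer being written / scanned out */
    long in_frame = -1, out_frame = -1, tears = 0;
    long lo = 0, hi = 0;                /* oldest/newest input frame seen this VGA frame */

    for (long t = 0; out_frame < out_frames; t++) {
        long ti = t % t_in, to = t % t_out;
        int in_line = (int)(ti * IN_LINES / t_in);
        int out_line = (int)(to * OUT_LINES / t_out);

        if (ti == 0) {                  /* new Mac frame: storing rule */
            in_frame++;
            wb = (out_line <= store_switch) ? rb ^ 1 : rb;
        }
        if (to == 0) {                  /* new VGA frame: output rule */
            if (out_frame >= 10 && lo != hi)
                tears++;                /* the VGA frame that just ended was torn */
            out_frame++;
            rb = (in_line <= output_switch) ? wb ^ 1 : wb;
            lo = LONG_MAX;
            hi = LONG_MIN;
        }

        tag[wb][in_line] = in_frame;    /* input writes its current line */
        long seen = tag[rb][out_line * IN_LINES / OUT_LINES]; /* output reads the matching Mac line */
        if (seen < lo) lo = seen;
        if (seen > hi) hi = seen;
    }
    return tears;
}
```

Under these assumed periods, `count_tears(16626, 16666, 62, 10, 1000)` is expected to report zero torn frames. Sweeping the two constants downward is a cheap way to see where the margin runs out before committing to values on the board.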