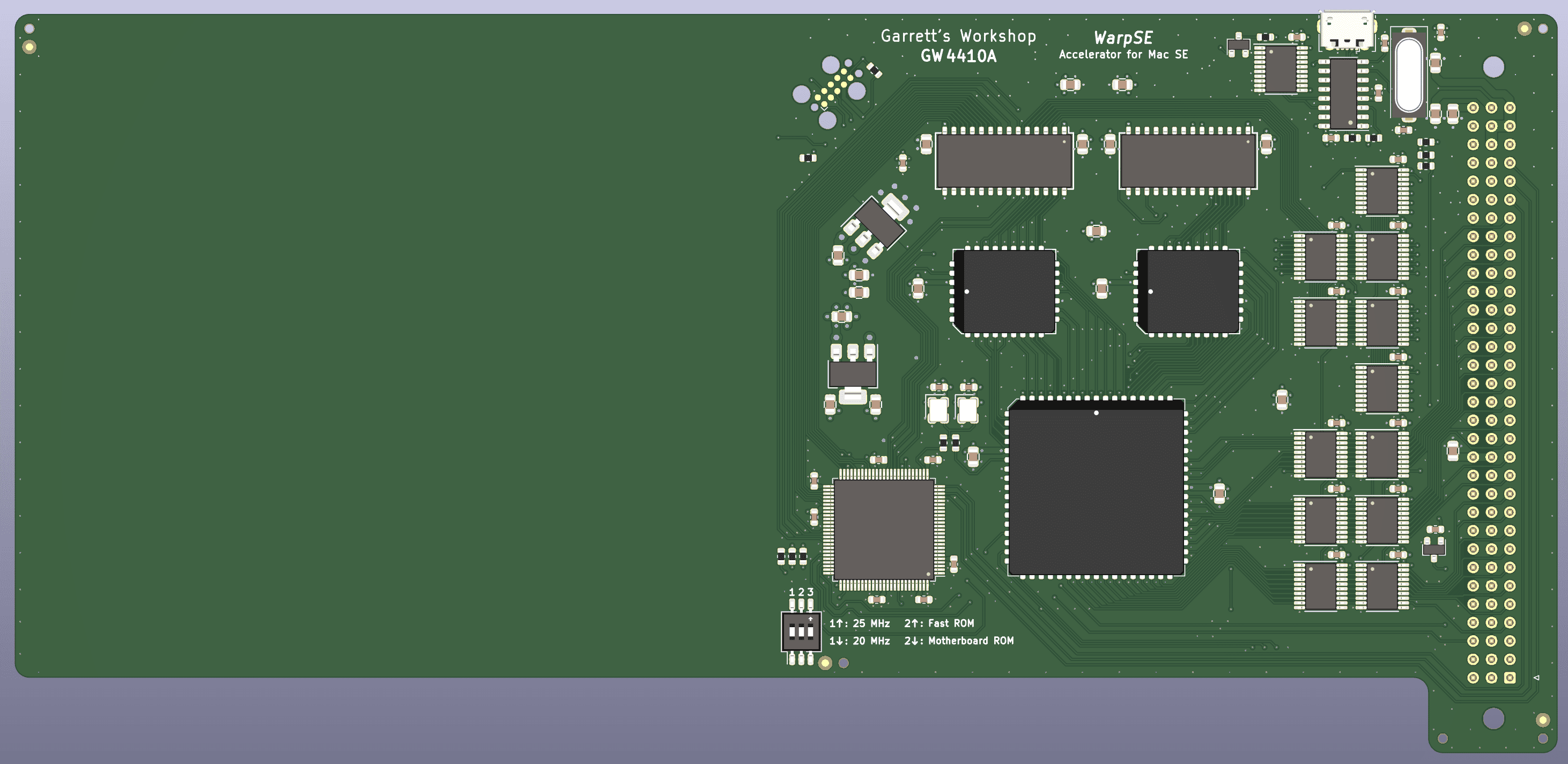

WarpSE: 25 MHz 68HC000-based accelerator for Mac SE

- Thread starter Zane Kaminski


Okay if you have any feature suggestions...

So... I admit to only skimming the thread, but is there a plan for network / ethernet capability? If not, I'm wondering if, instead of a male PDS connector "underneath" the 'board, a female wirewrap connector could be installed "on top" to allow an original SE PDS add-on to be used. I'm referring to this sort of connector...

Sure, the male pins would be unsupported underneath the 'board, but since it's likely to be fitted and forgotten, *if* the PDS pass thru works, that could open up other expansion possibilities....

Is this card on sale now? If so do you ship to Australia?

Nope, sorry, not yet. I don't even have a prototype yet. It should be out in a few months.

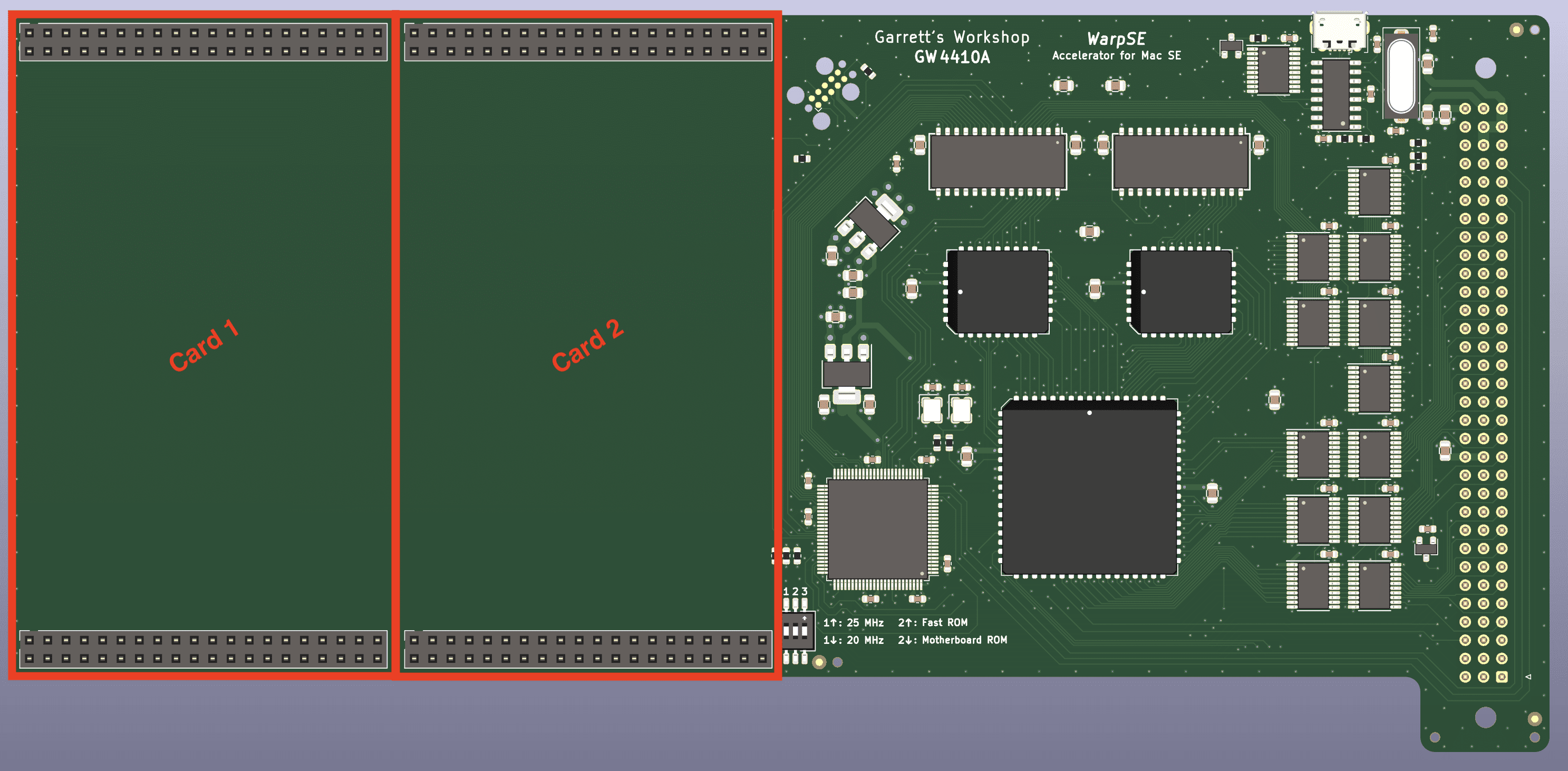

Ooh yes... but will the motherboard+card+card stack fit in the chassis? No layout changes are needed, so I'll try it! All existing cards should work except those that do DMA. Supporting DMA is possible, but it would slow down video and I/O performance, so I would rather try no DMA support first. I am not even aware of any Mac SE cards that do DMA anyway.


Oh, also, I have a bit of bad news. I got confused about the BMoW ROMinator ROM, thinking it was for the Mac SE as well as the Plus. Unfortunately not! It's a modification of the Mac Plus ROM and won't work on the SE, so I have removed it from the feature list. Of course, the hardware aspect works, so there's no reason we can't do a ROM disk ROM for the SE, but it's fairly low-priority. Maybe it'll appear in a future revision.


but will the motherboard+card+card stack fit in the chassis?

I would think so... Actually, with no "connector" body, it should fit even closer to the original 'logic board.

Would this approach allow for stability between boards?

Not specifically... you'd probably want some kind of pad / 'bumper' underneath the accelerator 'board. That said, is everyone aware that the SE logic 'board does have provision for stand-offs?

I have at least one period accelerator that spanned the full width of the 'board and used said holes for just this purpose. I know what's being created here is not that wide... but I wonder if a "full width" design might be warranted to cater for 'stability'?

My plan to support the accelerator was to stick a little 3M Bump-On rubber foot on the bottom, positioned directly over the MC68000 on the motherboard. Is it really worth it to widen the card so as to be able to use the standoffs?

It really would have to be that wide to go over to the standoff area lol. Width would be 213.25mm as opposed to 109.1mm on the current card. So is it worth it? All that extra width makes me feel like I should be adding some extra features with the space.

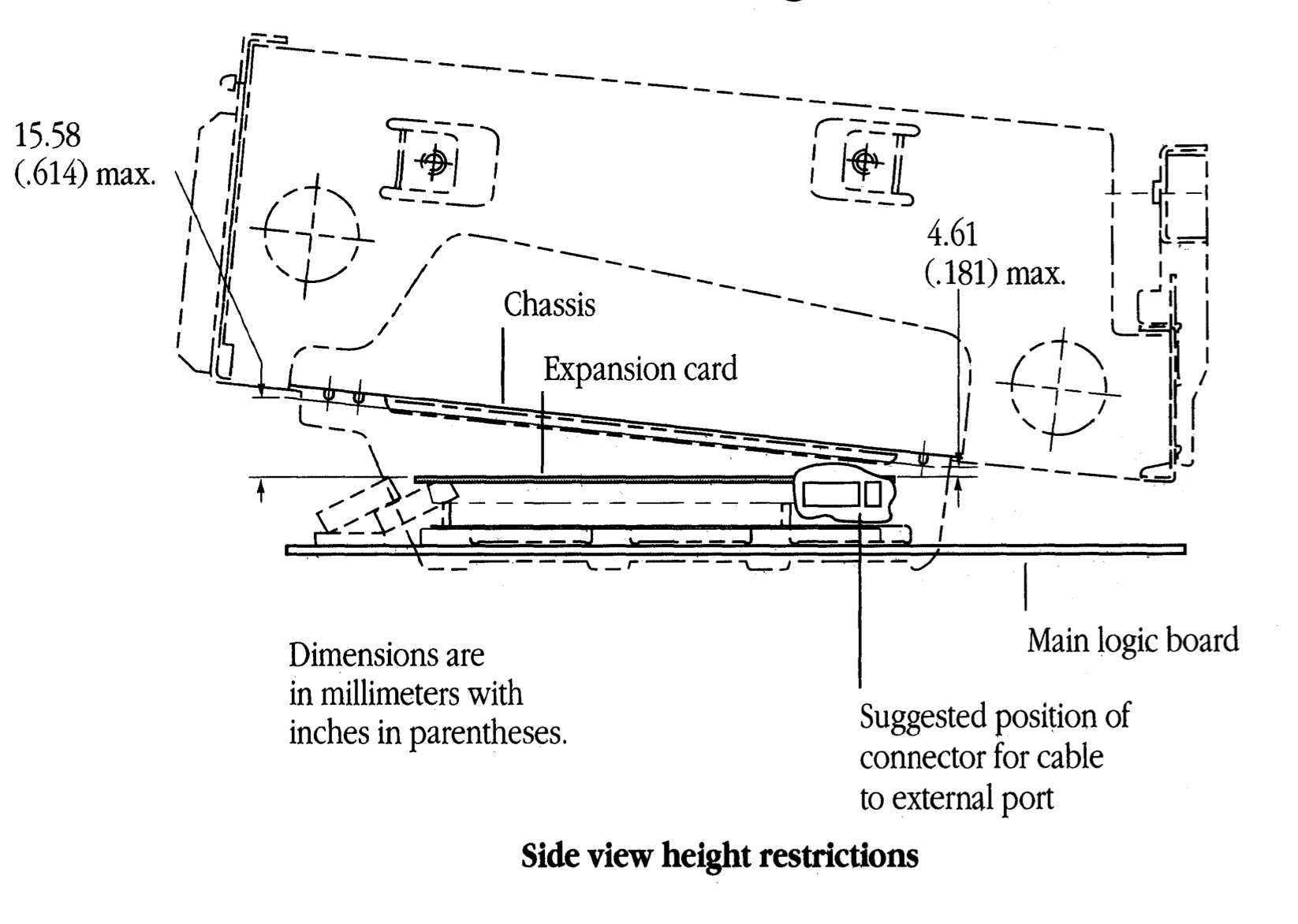

Anyway, back to the PDS connector thing. Will the whole stack of motherboard+accelerator+PDS card fit in the Mac chassis? Apparently at the top of the PDS card there's only 4.61 millimeters of room above the card to spare:


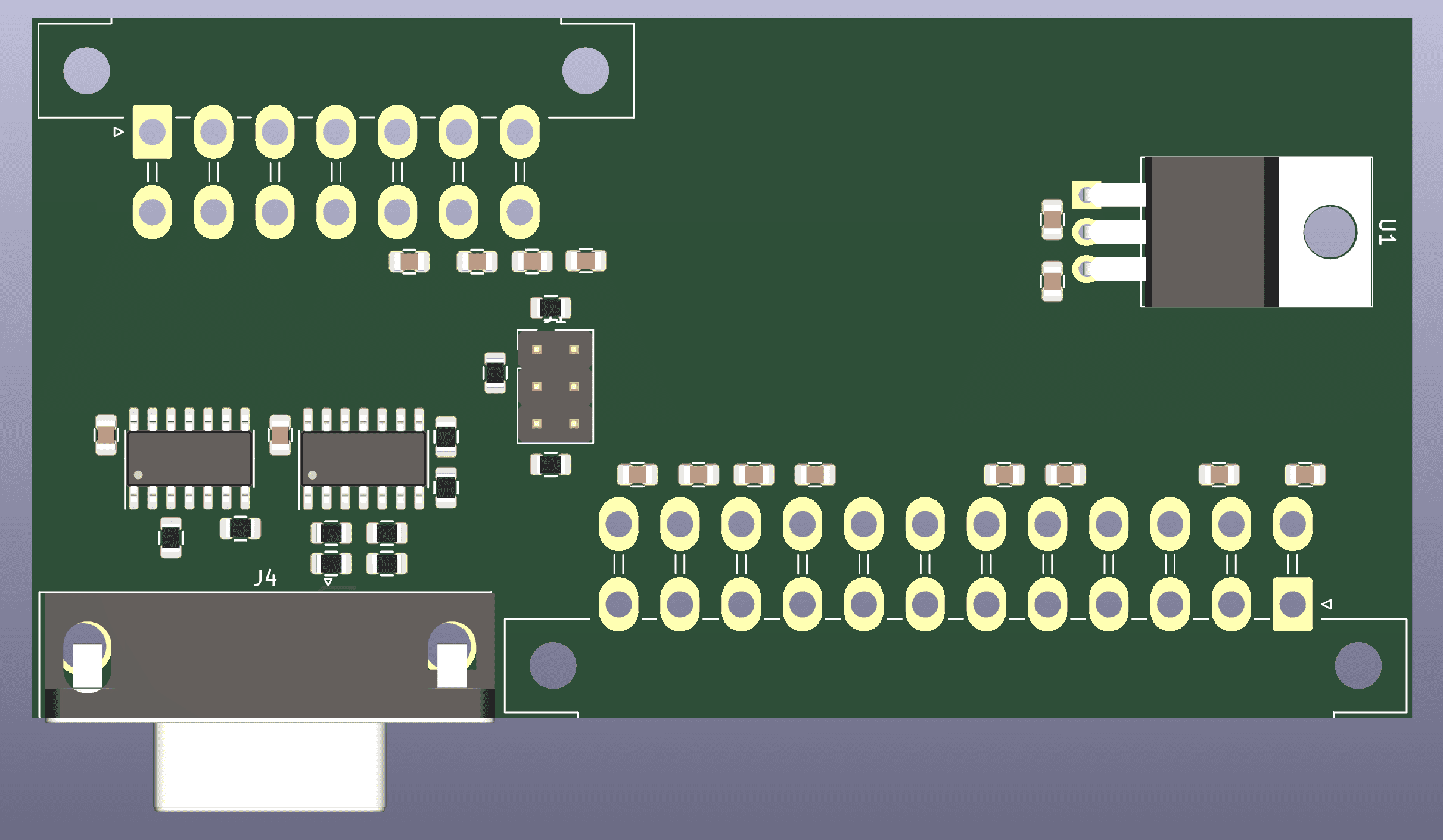

One last thing before prototype time... gotta make this little gizmo to connect an SE mounted on a board of wood to a monitor and ATX power supply:

Prototypes sooonnnnnn I promise


PDS Card? The only way I know of to do Accelerator + PDS in an SE would be Killy Klipped accelerator and verticalized PDS Card in Late SE/SE/30 Chassis. Bolle has done that and I'm planning to.

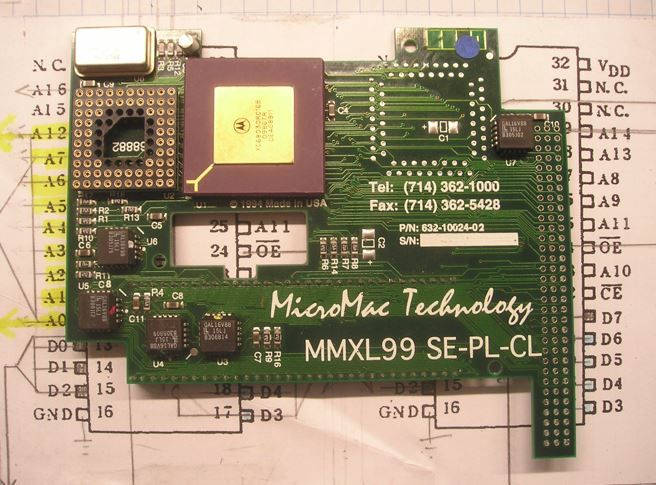

As for widening the card, I wouldn't bother. The multi-machine supporting Performer worked just fine in SE:

Haven't seen the SE PDS version, but my guess is that the hole next to U6 is something you'll want to implement on your board. Thinkin' it's for a standoff in the SE version, if not the Classic, or both?

There may be a direct copy of this board available fairly soon. For my version, SE PDS and Classic provisions are deleted for running on a Killy Klip with VidCard in the SE PDS poking up through the SE/30 chassis chimney. Also works in a Plus in that config.

Love your work here, absolutely fabulous project.

I've been curious as to what you think its performance will be against the cache-deprived Performer, hamstrung by the 8MHz memory bottleneck when its 030 is clocked at 32MHz?


Hard to compare the 'HC000 and the '030... It just depends on whether you need actual memory bandwidth or whether everything can fit in cache. A similar example is Ethereum mining. It's all memory bandwidth: you can basically divide a GPU's memory bandwidth by the amount of data required per hash, and that's the GPU's Ethereum hash rate. The RAM bandwidth required for Ethereum mining dwarfs the compute involved, so whether a GPU has a lot of FLOPS, integer performance, etc. doesn't matter, since most of the time mining Ethereum is spent waiting for RAM. On the other hand, 3D rendering and playing games on a GPU make much more use of the compute facilities, plus the way texture data is used is more cacheable than the intentionally random pattern of memory accesses in Ethereum mining.
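The Ethereum comparison boils down to a one-line bound: hash rate is at most memory bandwidth divided by bytes fetched per hash. A minimal sketch, assuming Ethash's commonly cited 64 DAG reads of 128 bytes each (8 KiB per hash) and a hypothetical GPU with 448 GB/s of memory bandwidth; both figures are illustrative, and real miners land somewhat below this ceiling.

```python
# Back-of-envelope ceiling for a memory-bandwidth-bound workload:
# hash rate <= memory bandwidth / bytes fetched from RAM per hash.

# Assumption: Ethash reads 64 DAG "pages" of 128 bytes each per hash.
DAG_ACCESSES_PER_HASH = 64
BYTES_PER_ACCESS = 128
BYTES_PER_HASH = DAG_ACCESSES_PER_HASH * BYTES_PER_ACCESS  # 8192 bytes


def max_hash_rate(bandwidth_bytes_per_s: float, bytes_per_hash: int = BYTES_PER_HASH) -> float:
    """Upper bound on hashes per second when every hash must stream
    bytes_per_hash from RAM; compute speed does not appear at all."""
    return bandwidth_bytes_per_s / bytes_per_hash


if __name__ == "__main__":
    bandwidth = 448e9  # hypothetical GPU memory bandwidth, bytes/s
    print(f"{max_hash_rate(bandwidth) / 1e6:.1f} MH/s ceiling")
```

Note that FLOPS never enter the formula, which is the point of the analogy: for a bandwidth-bound job, a faster core just waits longer.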


So which way does the Mac OS lean? Does it demand a lot of memory bandwidth? Or is the work limited to a 256-byte or smaller working set of program and data memory, to the extent that the '030 can really fly? We will see when the accelerator is done!
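Whether a workload "fits in cache" can be explored with a toy model. The sketch below assumes a direct-mapped 256-byte cache with 16-byte lines (roughly the shape of each of the 68030's on-chip instruction and data caches) and a program that sweeps repeatedly over a working set using 16-bit accesses. It ignores burst fills, write policy and the instruction/data split, so it shows the trend rather than predicting real Mac OS behaviour.

```python
# Toy model of a direct-mapped cache.
# Assumed geometry: 16 lines x 16 bytes = 256 bytes.
LINE_BYTES = 16
NUM_LINES = 16


def count_misses(working_set_bytes: int, passes: int = 100, access_bytes: int = 2) -> int:
    """Sweep sequentially over a working set `passes` times and count how
    many accesses miss, i.e. must go out over the slow main-memory bus."""
    tags = [None] * NUM_LINES  # stored tag per line; None means the line is empty
    misses = 0
    for _ in range(passes):
        for addr in range(0, working_set_bytes, access_bytes):
            block = addr // LINE_BYTES  # which 16-byte memory block
            index = block % NUM_LINES   # the only line this block may occupy
            tag = block // NUM_LINES    # distinguishes blocks sharing that line
            if tags[index] != tag:
                misses += 1
                tags[index] = tag       # fill the line, evicting its old contents
    return misses


if __name__ == "__main__":
    for size in (128, 256, 512, 1024):
        print(f"{size:5d}-byte working set: {count_misses(size)} misses over 100 passes")
```

Up to 256 bytes, only the cold-start misses reach memory; from 512 bytes up, each block is evicted by an alias before it is reused, so every pass refetches the whole working set over the 8 MHz bus.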

Does the 33 MHz MicroMac Performer edge out the SE/30 at all? If so, in what kind of programs/benchmarks?

Can't imagine it coming anywhere close to the 16MHz Mac II and SE/30 family even at 32MHz, which MicroMac never offered, BTW. That would have been the realm of the mid- and high-level Performer models with added features like cache and SIMMs, IIRC.

32MHz is a "because I can" kinda thing, not sure how much the bump from 16MHz would help IRL, but it sounds nice. Still stuck on that 16bit highway at 8MHz so I'm thinking diminished returns, but fun!

Don't have links handy to the higher end models.
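The "diminished returns" intuition is essentially Amdahl's law: only the compute-bound share of runtime scales with the CPU clock, while time spent waiting on the unchanged 8 MHz, 16-bit bus does not. A minimal sketch, where the bus-bound fraction is a hypothetical parameter, not a measured figure for any Mac workload:

```python
def speedup(bus_bound_fraction: float, clock_ratio: float) -> float:
    """Amdahl-style estimate of overall speedup when the CPU clock is
    multiplied by clock_ratio but bus-bound time stays the same.

    bus_bound_fraction: hypothetical share of the original runtime spent
    waiting on the fixed-speed bus (0.0 = pure compute, 1.0 = pure bus).
    """
    if not 0.0 <= bus_bound_fraction <= 1.0:
        raise ValueError("bus_bound_fraction must be between 0 and 1")
    if clock_ratio <= 0:
        raise ValueError("clock_ratio must be positive")
    new_time = bus_bound_fraction + (1.0 - bus_bound_fraction) / clock_ratio
    return 1.0 / new_time


if __name__ == "__main__":
    # Going from 16 MHz to 32 MHz doubles only the compute-bound part.
    for f in (0.25, 0.5, 0.75):
        print(f"bus-bound {f:.0%}: {speedup(f, 32 / 16):.2f}x overall")
```

With half the time spent on the bus, doubling the clock buys only about 1.33x, which matches the "fun, but diminishing" expectation.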

Anyway, back to the PDS connector thing. Will the whole stack of motherboard+accelerator+PDS card fit in the Mac chassis?

I was thinking of a vertically-mounted PDS card. Here's a picture of an SE showing the "opening" in the chassis where a "vertical" PDS card could go. You can also just see the two mounting holes for the 'top' of the card. Of course, stacking things would probably mean the holes don't quite line up, but I imagine those tabs could be manipulated slightly...

FWIW, looking at that chassis... it might be the "wider" SE/30 version. I believe there were three different chassis used in the SE: the very early one (800k) didn't even have a PDS 'hole', then there was one with a narrow hole, and then I believe they just used the one for the SE/30, even though the hole was much wider than the length of the SE PDS connector.

Interesting, I only know of the two versions, with and without SE/30 PDS expansion sidewall provision? Nor have I ever seen an SE PDS card meant for vertical installation through such an opening.

Radius Accelerators and MagicBus adapter cards made provision for their FPD and TPD Vidcards installed vertically in the slot at the rear of the SE chassis. I'll find or take and post some pics of the MagicBus installation.

Depending on availability, adding that connector to WarpSE would be a wonderful feature, supporting Radius FPD/TPD cards right out of the gate. I still haven't buzzed connections to confirm, but I remember it looking like a full PDS passthru.

Have you found a pic of the intermediate SE chassis to post? Very curious.


Calls for yet a larger PCB, but well worth considering. The MagicBus Adapter Card wasn't full-width SE spec, so heading straight back with your PCB might be better than spanning the width of the case?

I only know of the two versions, with and without SE/30 PDS expansion sidewall provision?

Well... could be I'm hallucinating... but I could swear I was resurrecting an SE/30 that had Maxell-bombed and grabbed a spare chassis only to discover the 'hole' was too narrow. I can't put my finger on any pics, but I will check the next time I'm down at my lockup; I have a stack of chassis.

Nor have I ever seen an SE PDS card meant for vertical installation through such an opening.

That too could be a brain-fart... I *thought* my SE StNIC was vertical, but, no, it's horizontal...

Oh well...

Calls for yet a larger PCB

I'd say... that's one monster card combo!

All that extra width makes me feel like I should be adding some extra features with the space.

What about dropping the ScuzNet circuitry in that 'space'? You've addressed the CPU, so what about solid-state HD, and, connected...

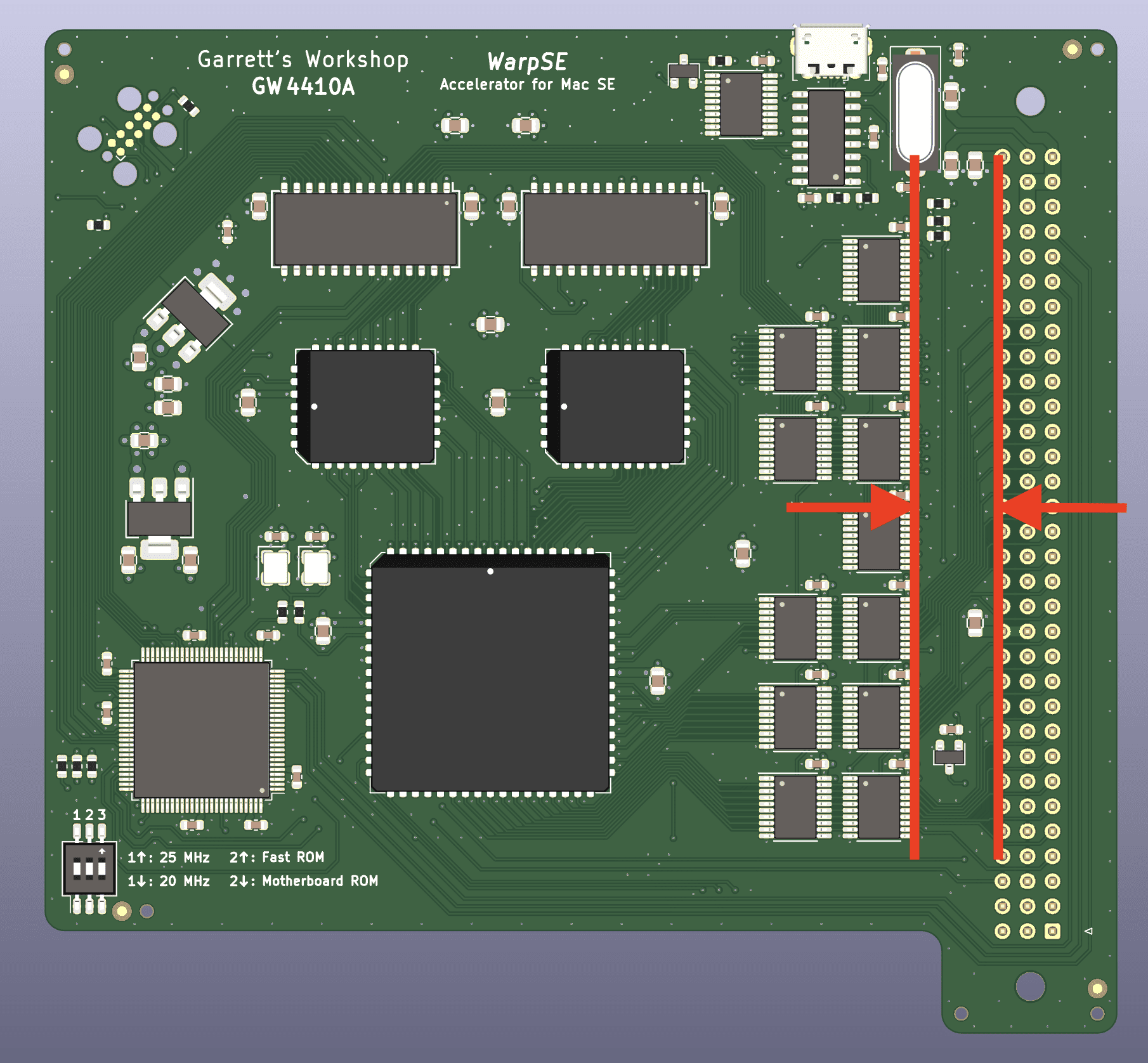

Hmm, interesting approach with the vertical cards, as suggested by both mg.man and Trash80. The current layout doesn't support anything but the vertical PDS extension right above the existing one, though. There's not enough room in this critical dimension in the layout:

Everything would have to be rejiggered a bit to let the PDS bus go upward.

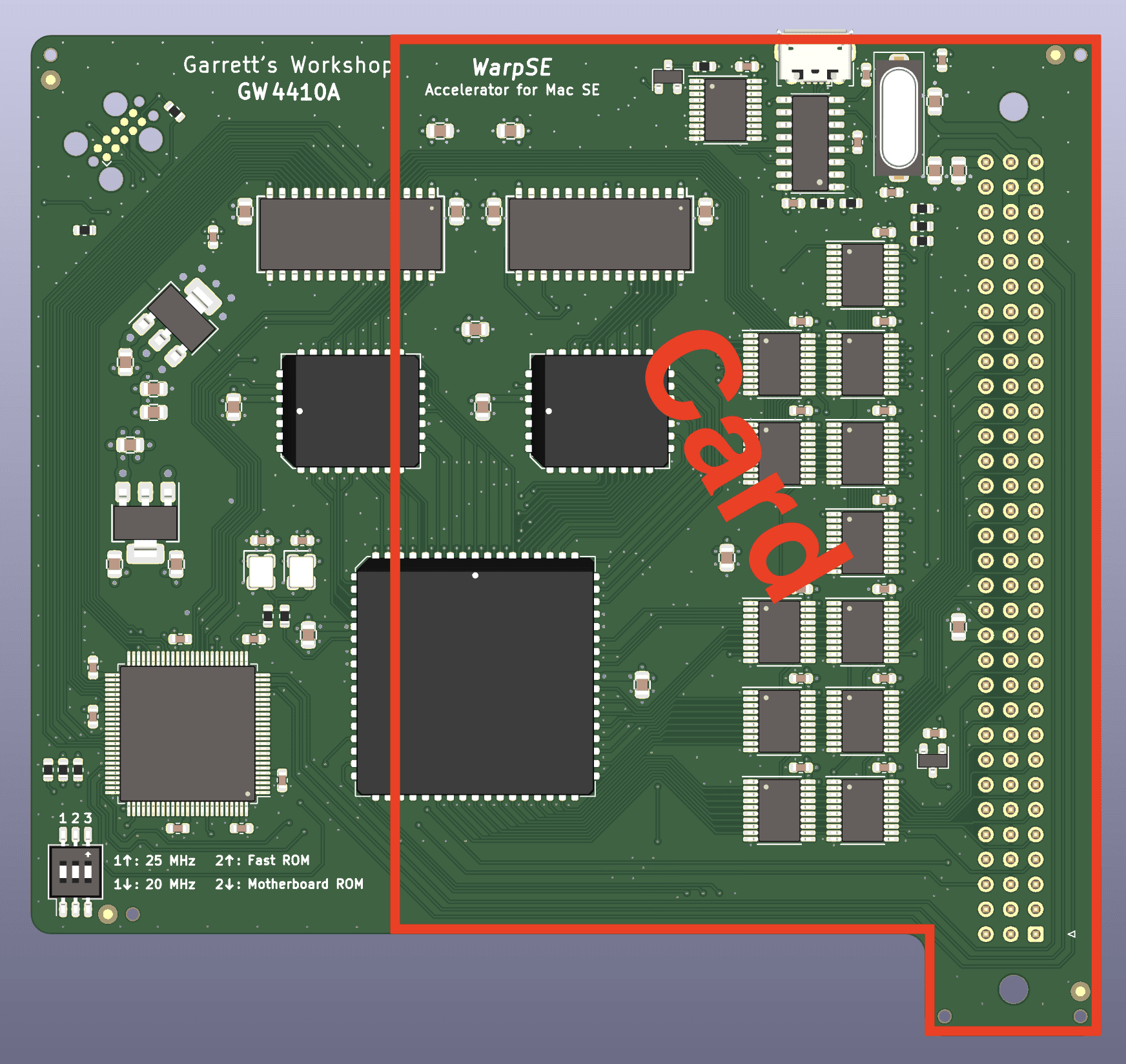

But isn't it better to just extend the card rightward to allow for new peripherals to connect to the 25 MHz fast bus? That would be better:

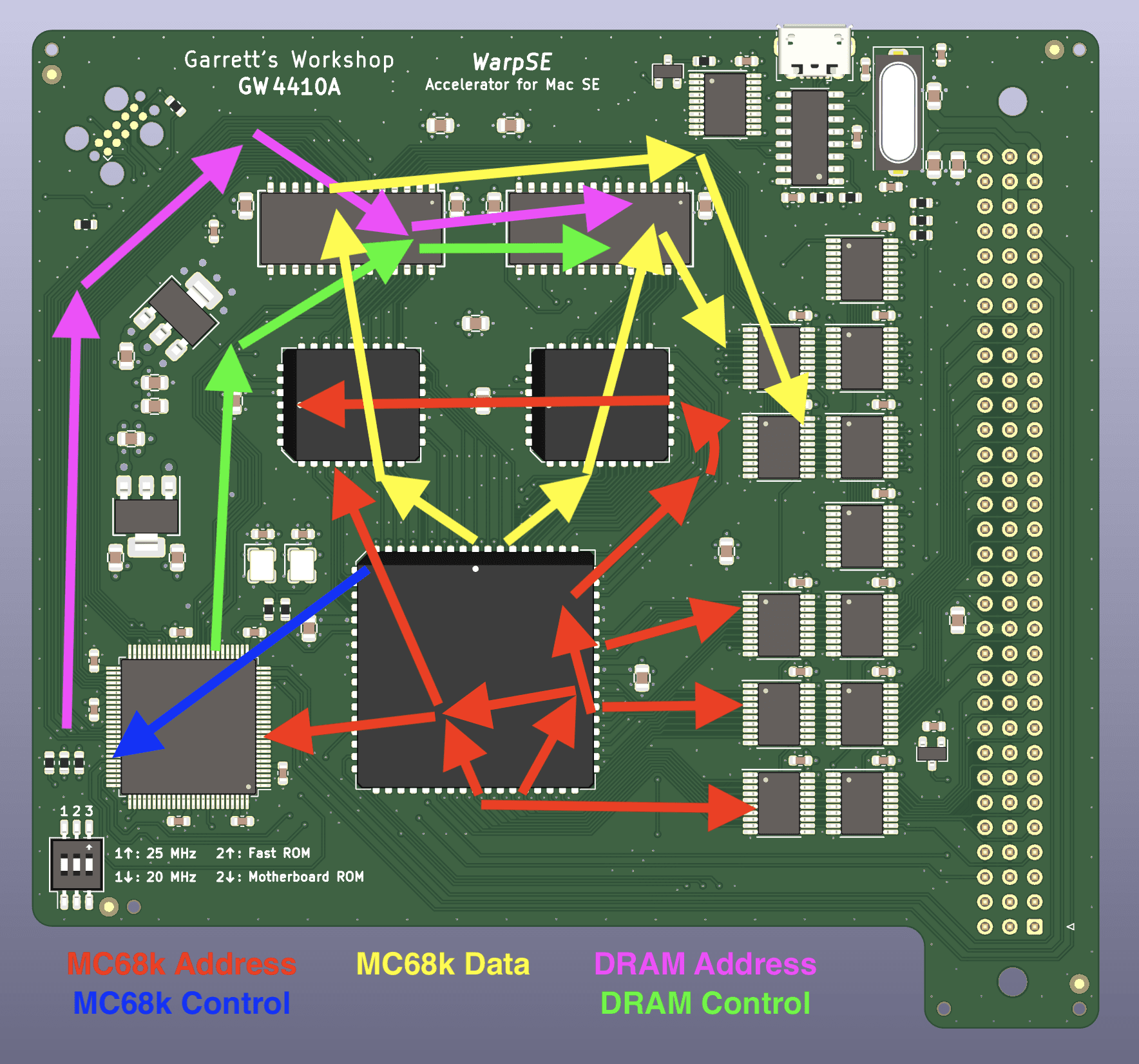

This is all impossible to implement in the current layout though! The routing would have to be totally redone to bring the fast bus over to the left side of the card. See this diagram:

The entirety of the address bus (red) would have to squeeze through the little space between the control signals (blue) and the data bus (yellow), and then all three would have to go over to the left side of the card. Right now only a few address bus lines take that route up from the MC68k to the ROM. The current placement doesn't really have enough extra room to run the whole address bus through there. So other than the possibility of a stacked PDS card, I think the addition of the slots is a bridge too far for now.

ScuzNet is okay, but I think it would be better to leave it as an external device made by someone else. I am a big fan of the recent SCSI developments, particularly BlueSCSI, but I am personally skeptical of myself/GW shipping something with SCSI. The issue is that all of the new-manufacture SCSI devices (including ScuzNet, RaSCSI, SCSI2SD, etc.) are out of spec on either SCSI bus drive strength or edge rate. Some use chips that are not specified to drive two big terminators on either end of the bus and may not reach a low enough "0" level. Others use powerful buffers that transition too quickly, creating a risk that the fast signal edges may echo in the drives as they go past, screwing up the received signal.

You can see this in the evolution of the SCSI2SD v5/v6 designs over the past two years: the designer at one point added ferrite beads in series with the SCSI drivers to try to address this issue, then removed them, ostensibly because they caused more problems than they solved. The question is, with those 74LVT-series buffers on the ScuzNet putting out edges 10x faster than the SCSI spec allows for, does it really work reliably at the maximum bus length, with 7 drives on the bus, and using a crappy cable? With shorter cables and fewer drives, problems are less likely, but historically there were times when manufacturers such as Adaptec made SCSI ASICs suffering from this same issue and had to fix it one way or another. Now that this stuff is "vintage," I don't think people are hooking up big long SCSI buses with 7 drives, where the signal integrity issue is most likely to manifest, which is why the issue has flown under the radar for so long. I have not been able to come up with a buffer chip/design that has the requisite 5 nanosecond output edge rate while also being able to output the 48 mA of current required to drive the large SCSI terminators.
So right now I have sort of a moratorium on SCSI device development because I would rather not make a less-than-industrial-strength product where it's possible to push the envelope in a way that should be supported but it's flaky.
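Both constraints above (enough sink current for two terminators, and edges slow enough not to ring off drive stubs) can be put into rough numbers. This is a back-of-envelope sketch only. It assumes classic passive single-ended termination (220 Ω to TERMPWR and 330 Ω to ground at each end of the bus), a nominal 5.0 V TERMPWR, a 0.5 V low level, a hypothetical cable velocity factor of 0.66, a hypothetical 10 cm stub, and the common rule of thumb that a stub behaves like a transmission line once its delay exceeds about 1/6 of the rise time. None of these values come from the post or from a specific product.

```python
# Assumed passive single-ended terminator at each end of the bus.
R_PULLUP = 220.0    # ohms, to TERMPWR (assumption)
R_PULLDOWN = 330.0  # ohms, to ground (assumption)
C = 299_792_458.0   # speed of light in vacuum, m/s


def driver_sink_current(termpwr: float, v_ol: float, terminators: int = 2) -> float:
    """Current (A) a driver must sink to hold the line at v_ol against
    `terminators` identical 220/330 ohm terminators (Thevenin model)."""
    v_th = termpwr * R_PULLDOWN / (R_PULLUP + R_PULLDOWN)   # open-circuit line voltage
    r_th = R_PULLUP * R_PULLDOWN / (R_PULLUP + R_PULLDOWN)  # 132 ohms per terminator
    return terminators * (v_th - v_ol) / r_th


def critical_length_m(rise_time_s: float, velocity_factor: float = 0.66,
                      fraction: float = 1 / 6) -> float:
    """Rule-of-thumb length beyond which a stub acts like a transmission
    line: its one-way delay exceeds `fraction` of the rise time."""
    return rise_time_s * fraction * velocity_factor * C


if __name__ == "__main__":
    i = driver_sink_current(termpwr=5.0, v_ol=0.5)
    print(f"Nominal sink current for two terminators: {i * 1000:.1f} mA")
    stub = 0.10  # hypothetical stub length, metres
    for t_r in (5e-9, 0.5e-9):  # 5 ns edge vs. one 10x faster
        l_crit = critical_length_m(t_r)
        verdict = "acts as a transmission line" if stub > l_crit else "looks like a lumped load"
        print(f"rise {t_r * 1e9:.1f} ns: critical length {l_crit * 100:.1f} cm, "
              f"{stub * 100:.0f} cm stub {verdict}")
```

Under these assumptions two terminators draw roughly 38 mA at 0.5 V, below the 48 mA drive figure mentioned above, and a 10x faster edge shrinks the critical length from about 16 cm to under 2 cm, so the same stub goes from harmless to a reflection source. The 1/6 fraction is only one common rule of thumb (1/4 is also used), so treat the crossover as approximate.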

Maybe we can make custom low-profile PDS cards for the stacked connector approach. Rather than a whole full-sized PDS card, they'll be thin miniature cards designed to go here:

I think I could fit a video card or network interface in that space. The key idea here is that in designing new cards, we can make sure they aren't too thick so as to hit the chassis in the way that a legacy SE PDS card might.
